|

[Latest News]

Pennsylvania Senate Race Moves to 'Toss Up' as Dem Bob Casey Loses Ground: Cook Political Report

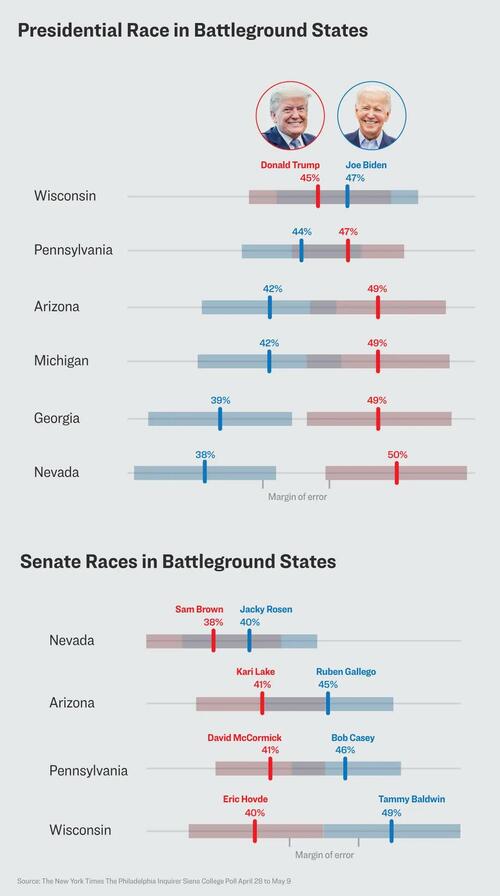

Pennsylvania’s Senate race has shifted from “lean Democrat” to a “toss up” as incumbent Sen. Bob Casey (D.) loses ground to Republican challenger David McCormick just two weeks before Election Day, according to the Cook Political Report.

Published:10/21/2024 5:27:49 PM

|

|

Too Weird to Check: Did Someone Bet $30M Just to Tweak Polymarket on Election?

Published:10/18/2024 6:01:28 PM

|

|

'Blue Wall' Democrat aligns with Trump in new pitch to voters before election

Democratic Sen. Bob Casey has a new campaign ad in Pennsylvania that highlights his support for former President Trump's trade policies, a pitch that has been criticized by Republicans.

Published:10/18/2024 4:47:03 PM

|

|

[Uncategorized]

PA Dem Sen. Bob Casey Breaks With Biden, Agrees With Trump on Fracking in New Ad

"Casey bucked Biden to protect fracking, and he sided with Trump to end NAFTA and put tariffs on China to stop them from cheating."

The post PA Dem Sen. Bob Casey Breaks With Biden, Agrees With Trump on Fracking in New Ad first appeared on Legal Insurrection.

Published:10/18/2024 3:42:45 PM

|

|

Fireworks expected in final Pennsylvania Senate debate in race that may decide chamber's majority

Democratic Sen. Bob Casey of Pennsylvania and Republican challenger Dave McCormick face off Tuesday in their final debate in a showdown that may decide if the GOP wins back the majority.

Published:10/15/2024 3:42:28 AM

|

|

[World]

Democratic senator attacks his Pennsylvania rival with an invented claim

Sen. Bob Casey falsely says Republican Dave McCormick “made clear” he’d slash Medicare and Social Security.

Published:10/14/2024 3:21:25 PM

|

|

[Elections]

Bob Casey Refuses To Revoke Endorsement of 'Inflammatory' Anti-Israel 'Squad' Member Summer Lee

Sen. Bob Casey refused Wednesday to revoke his endorsement of fellow Pennsylvania Democrat Summer Lee over her recent anti-Israel remarks, telling a Jewish group he has to instead "concentrate" on his own reelection campaign. Just two days earlier, on the one-year anniversary of Oct. 7, Lee issued a statement blaming Israel for Hamas's terror attack, rhetoric that the Jewish Federation of Pittsburgh said "inflamed our community."

Published:10/10/2024 12:18:46 PM

|

|

[Democrats]

Bob Casey Condemns Squad Member Summer Lee’s Anti-Israel Statements After Ignoring Them for a Year

Sen. Bob Casey, locked in one of the most closely watched Senate contests in the country, condemned fellow Pennsylvania Democrat Summer Lee over her anti-Israel statement on the anniversary of the October 7 attack on Israel. For the last year, Casey had resisted calls to denounce Lee’s previous anti-Israel remarks and had stood by his endorsement of her.

Published:10/8/2024 5:43:10 PM

|

[Markets]

Doug Casey Exposes The Global Elites' Plan For Feudalism 2.0... And How You Can Resist

Authored by Doug Casey via InternationalMan.com,

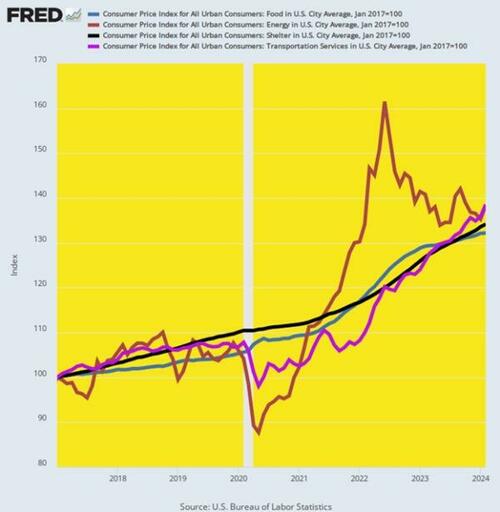

International Man: There’s little doubt the self-anointed elite are hostile to the middle class, which is on its way to extinction thanks to soaring inflation and taxation.

It seems they would like to implement a kinder and gentler version of feudalism.

What is really going on here, and what is the end game?

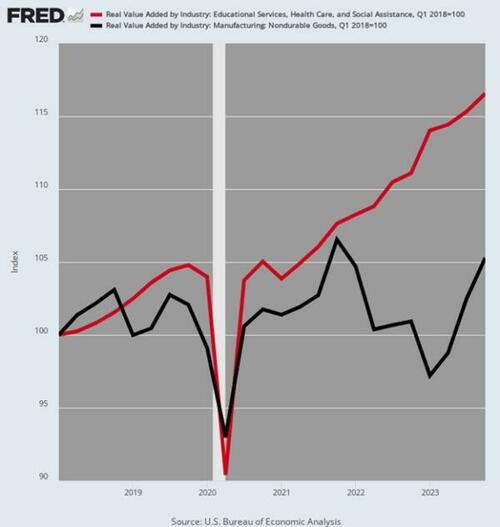

Doug Casey: The middle class, the bourgeoisie, emerged with the death of feudalism, the inception of the Renaissance, the Enlightenment, and finally, the Industrial Revolution.

“Middle class” has been given a bad connotation in recent times. Leftists want everybody to believe that the bourgeoisie is full of consumerist faults. They’re mocked for being concerned with material well-being and improving their status. The elites feel threatened by them in a way they don’t by the lower-class plebs, grunt workers who don’t expect more from life.

Bourgeoisie simply means city dweller. Starting in the late Middle Ages, city dwellers were independent, with their own trades and businesses. Living in towns got them out from under the control of the feudal warrior elites.

Cities became intellectual centers, where the growing wealth of the bourgeoisie—the middle class—gave them the leisure needed to develop science, technology, engineering, literature, and medicine. Universities expanded the idea of education beyond the realm of theology. Commerce and personal freedom attracted the best of the peasants, who rose to the middle class. Cities ended feudalism, a system whereby everyone was born into a class and occupation, and was expected to stay there for life, obligated to pay taxes—protection money—to his “betters”. The rise of the bourgeoisie didn’t suit the ruling classes, who liked dominating society.

Capitalism developed as the bourgeoisie became wealthy. The rest is well-known history, but the point must be made that the creation of the middle class, capitalism, and bourgeois values elevated peasants from poverty and created today’s world.

But, then and now, a certain percentage of the population wants to control everyone else. The types who go to Bilderberg, the World Economic Forum, CFR, and the like see themselves as elite new aristocrats who should dominate the others. Even though most of them came from the middle class, now that they’ve “made it,” they like to pull the ladder up. And if they can’t eliminate the remaining bourgeoisie, they at least want to neuter or defang it.

So what’s the end game?

I think it might look something like the movie Rollerball. Keep the plebs entertained while the elite, in the form of a corporate aristocracy, controls society.

International Man: Yuval Harari is a prominent World Economic Forum (WEF) member.

He suggested that the elite should use a universal basic income, drugs, and video games to keep the “useless class” docile and occupied.

What is your take on these comments in the context of Feudalism 2.0?

Doug Casey: A nasty little fellow, Harari is what might be termed a court intellectual for the World Economic Forum. He’s there to provide an intellectual patina for the power members, who are basically the businessmen, politicos, and media personalities. They’re not thinkers or interested in ideas but philistines concerned with money and power. Harari gives them an intellectual framework to justify their actions and plans.

As far as his books are concerned, they amount to a lot of generic truisms, obvious observations, justifications of current trends, and a projection of how the world will evolve. As an author and thinker, he’s knowledgeable and intelligent but grossly overrated. He owes his success to promotion from the new wannabe aristocracy and their hangers-on. He illustrates the advantages of being hooked up with power people.

Harari has gone from being just another college professor, living with his husband in Israel, to being an internationally famous multi-millionaire pundit.

He expects the “useless eaters” will be maintained on a subsistence basis until they die out. I’m not sure how much the Covid hysteria, followed by the vaccine, has to do with that. It’s becoming quite clear that Covid itself was an artificially constructed flu variant, mainly affecting the very old, very sick, and very overweight. The vaccine is useless in preventing Covid but has caused significant increases in morbidity and mortality among healthy recipients. Was it a trial run to cleanse the world of useless eaters?

I don’t know. But, based on what people like Stalin, Hitler, Mao, and Pol Pot, among many others, have done in recent years, I don’t think it’s out of the question. No doubt, the new aristocracy wants to cement themselves in place. They certainly don’t like rubbing shoulders with the hoi polloi when they visit Venice, Machu Picchu, and the like.

International Man: How does the WEF’s vision of “you will own nothing and be happy” compare to the previous feudal system of medieval Europe?

Doug Casey: Serfs, unlike slaves, had some rights; they owned tools and huts. But their position in society was fixed; they couldn’t easily move, rather like a medieval version of today’s 15-minute city. They had to recognize their betters and not say anything challenging, much like today’s increasingly draconian limits on free speech.

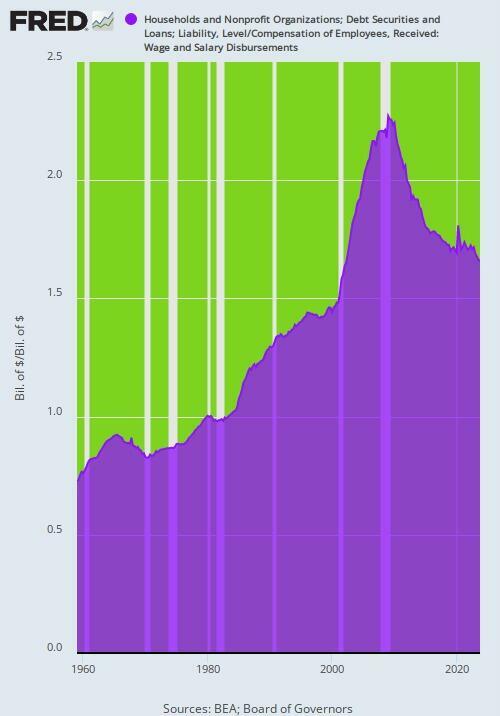

I expect that the gigantic amount of debt in society today will be the means of turning middle-class Americans into serfs. The lower classes are already welfare recipients who produce very little; they’ll soon be replaced by robots.

The better educated ones are buried under their college debts. But everybody is buried under growing credit card debt, auto debt, mortgage debt, and sometimes even tax debt.

If someone makes a lucky capital gain in the stock market or by selling his house, he might spend that money only to find that the government wants 20%, 30%, or 40% of the gain. So the gain, instead of a blessing, becomes a disaster in disguise.

Many people today are burdened by debt, living paycheck to paycheck. They’re barely getting by, under immense pressure to cover food and rent. They’d probably be quite willing to take a deal offering essentially “three hots and a cot,” a tiny apartment, internet, and some extra money to hang around Starbucks.

International Man: How do you see Feudalism 2.0 developing over the coming months and years?

What can be done to resist this agenda?

Doug Casey: Trends in motion tend to stay in motion until they reach some type of a crisis—when anything can happen. Let’s look at some economic systems, as spelled out by Karl Marx.

In Communism, the Marxist ideal, the State owns both the means of production (factories, farms, and such) as well as consumer goods (houses, cars, and theoretically, even your clothes). Mao’s China is as close as anyone’s come.

Socialism is a way station to Communism. The State owns the means of production, but individuals can still own consumer goods. There are lots of countries with socialist ideals, but no real socialist countries. Cuba probably comes closest.

Fascism is an economic system where both the means of production and consumer goods are privately owned, but they’re both 100% State-controlled. Most of the world’s countries are fascist. The word was coined by Mussolini; he meant it to describe the melding of the State, corporations, and unions.

Few people know that Marx coined the word “capitalism”. It’s a system where everything is both privately owned and privately controlled. There are no purely capitalist countries.

In feudalism, a lord owns everything but grants fiefs to subordinates. An aristocracy is supported by the plebs through taxation. Feudalism is based on the plebs providing service and taxes to the lord in exchange for “protection” from other lords.

Now for some pure speculation on my part.

Most of the world’s governments, including that of the US, are terminally bankrupt. They’ll prove unable to meet their obligations. Meanwhile, the prospect of wars, secessions, and crime is growing. I suspect wealthy corporations and individuals will wind up supplanting most traditional governments.

The result could be called neo-feudalism.

The average person is looking for someone or something to save him, to kiss everything and make it better, when times get tough. With governments bankrupt and dysfunctional, solvent and powerful individuals and corporations could take their place.

Harari and his pals want to see the plebs given a guaranteed annual income, a place to live, and entertainment until the useless eaters fade away. But it won’t be as neat as Harari’s wet dreams imagine. The world will be chaotic. We may be on our way to an idiocracy as well, where the populace is dumbed down so they don’t get dangerous ideas.

No matter how things sort out, I think we’re looking at a chaotic and dangerous situation in the near term.

I don’t see voting as a solution. Notwithstanding the differences between Harris and Trump, it just amounts to choosing the lesser of two evils, which in this case would certainly be Trump. But even if you elected Mises, Hayek, Ron Paul, or Harry Browne, I’m afraid the tide of history would wash them away.

In any event, your vote doesn’t really count. Or perhaps I should say it counts about as much as a grain of sand on a beach with hundreds of millions of grains of sand. And even then, as Stalin said, it’s not who votes that counts. It’s who counts the votes.

What can you do to resist the shape of things to come?

It’s an uphill fight because if you’re liberty-oriented, you’re part of a tiny minority at odds with the views of most of your fellow citizens, who’ve been indoctrinated by years of schooling, media, and entertainment. Collectivist memes are cemented in their minds. And when they talk to their contemporaries, they tend to mutually reinforce their beliefs.

When you’re in a group, it can be dangerous to have different beliefs, in much the same way that it’s dangerous for a chicken in a flock to have a feather out of place. The other chickens will peck it to death. Reigning ideas tend to be brutally enforced.

What can you do about this?

Other than trying to maintain your personal integrity, there’s not much you can do to roll back the tsunami. There wasn’t much that a freedom-loving Russian could do in 1917, a freedom-loving German could do in 1933, or a freedom-loving Cuban could do in 1959. Or a freedom-loving Venezuelan today.

The best you can do is to try to save yourself, your family, and your like-minded friends. Changing society for the better is a long shot. Although I hope Milei in Argentina proves me wrong.

International Man: What do you suggest individuals do to ensure they don’t become modern serfs if Feudalism 2.0 emerges?

Doug Casey: There are two types of freedom: physical and financial.

From a physical point of view, it’s important not to be tied down the way a serf might be. You don’t want all your possessions to be in one place where they’re easily controlled by the powers that be. Don’t act like a plant. Staying rooted in one place is not an optimum survival strategy for a human in tough times.

The powers that be are interested in controlling other people. It’s best to be a moving target, which makes you much harder to hit.

This is a problem for those of us who think that the US is still the land of the free. It’s not. It’s been devolving for decades. My guess is that over the next few years, perhaps starting with this election, the US will ever more closely resemble the other 200 nation-states that cover the face of the globe like a skin disease.

The single most important thing you can do is internationalize and make sure that all your assets aren’t in one bailiwick, under the control of one government.

From a financial point of view, it gives you the freedom to travel and move, especially with the coming FX controls and CBDCs. Use gold and Bitcoin. You should already have a good stash of both. If you don’t, it’s not too late to start accumulating and transferring assets into them.

* * *

The months and years ahead will be politically, economically, and socially volatile. What you do to prepare could mean the difference between suffering crippling losses and coming out ahead. That’s precisely why legendary investor and NY Times best-selling author Doug Casey just released an urgent report on how to survive and thrive.

|

[Entertainment]

Diddy and Kim Porter’s Kids Break Silence on Death and Memoir Rumors

The children of Sean “Diddy” Combs and the late Kim Porter are shutting down the speculation surrounding their mother’s death.

Christian Combs, 26, and twins Jessie Combs and D’Lila Combs, both...

Published:9/25/2024 6:19:12 AM

|

|

[Politics]

The Truth About Springfield, Ohio

This week, The Washington Post's Libby Casey, Rhonda Colvin and James Hohmann sit down with Senior Video Journalist Jorge Ribas, who just got back from Springfield, Ohio – where the neighborhood pets are decidedly not being eaten. The crew dives into why former President Donald Trump continues to lean into anti-immigrant rhetoric and how Springfield residents feel about the sudden wave of national attention on their city.

Plus, a new Washington Post poll shows Trump and Vice President Harris are essentially tied in Pennsylvania – the key swing state that could determine the outcome of the 2024 presidential election.

And does the Teamsters' decision not to endorse a candidate matter?

Published:9/19/2024 3:19:48 PM

|

[Markets]

US Secures Convictions, Guilty Pleas As CCP-Directed Spying Exposed

Authored by Eva Fu, Catherine Yang via The Epoch Times (emphasis ours),

For years, Beijing has been deepening its hold on America, drawing intelligence from the U.S. government while silencing critics with the help of agents embedded in U.S. society.

The United States is now hitting back—and seeing results, according to experts.

Illustration by The Epoch Times, Shutterstock

In early September, prosecutors arrested Linda Sun, former aide to New York Gov. Kathy Hochul, accusing her of acting on behalf of Beijing in exchange for gifts and payouts valued in millions of dollars to her family.

There has also been a marked increase in the rate of convictions or pleas in recent months. The Justice Department has brought dozens of CCP-directed espionage and foreign agent cases in the past four years, resulting in at least 13 convictions or pleas, an Epoch Times review of the court records shows. More than half of those came this year, including three in the past month.

On Aug. 6, a Chinese American scholar posing as a pro-democracy activist was convicted by a jury for spying on dissidents for the CCP.

On Aug. 13, a U.S. Army intelligence analyst from Texas pleaded guilty to selling military secrets to the CCP.

On Aug. 23, a software engineer who worked two decades at Verizon pleaded guilty to gathering intelligence on countless dissidents and organizations targeted by the CCP since 2012.

Case documents reveal a broad range of criminal actions taken by agents, often different from what most may imagine to be spying. Beyond industrial espionage and covert influence campaigns, the regime has directed hacker rings, including a group that was charged and sanctioned this year for waging a 14-year campaign on the United States.

“I feel that our nation must take every opportunity to stop these threats,” Rep. Don Bacon (R-Neb.), chair of the cybersecurity subcommittee for the House Armed Services Committee, told The Epoch Times, noting that the U.S. intelligence community has identified Beijing as the number one threat to the United States.

Bacon has experienced Chinese espionage attempts firsthand. Last year, he was hacked by CCP-linked hackers who also broke into email systems of State and Commerce department officials and dozens of other groups.

“Can we ever say that whatever actions we are taking are enough? I don’t believe so as the threats are increasing in frequency, sophistication, and national security impact,” Bacon said.

Who Are the Spies?

The CCP has long targeted people of Chinese descent, more than 60 million of whom live outside China, as potential assets in its intelligence operations.

Among those charged by the DOJ in the foreign agent cases are officials of the CCP’s top intelligence-gathering agency, the Ministry of State Security (MSS); Chinese citizens traveling to the United States under false pretenses; hackers residing in Asian countries; and asylees, permanent residents, and U.S. citizens of Chinese descent.

Some reside in the United States while dozens of others charged are known to reside in China, and will now face arrest if they ever set foot on American soil.

Many others are U.S. citizens who aren’t of Chinese descent. They include active military members, former law enforcement, and experts in competitive fields.

Linda Sun, a former aide to New York Gov. Kathy Hochul, and her husband, Chris Hu, exit the federal court in Brooklyn after Sun was charged with acting on behalf of the Chinese Communist Party, in New York City on Sept. 3, 2024. Kent J. Edwards/Reuters

The CCP engages in what experts such as Casey Fleming, chair and CEO of risk consultancy BlackOps Partners, describe as “unrestricted warfare,” meaning there are no legal, ethical, or moral lines it will not cross to pursue its objectives. It capitalizes on one’s baser instincts—greed, pride, lust, shame—to recruit assets.

“Number one, it’s money. Number two, it’s ego. Number three, it’s blackmail,” Fleming, who advises the DOJ, FBI, and Congress on the CCP threat, told The Epoch Times.

In May, two New Yorkers pleaded guilty in a case whose indictment charged seven people, including MSS officials in China, with trying to coerce an American family to go back to China to be imprisoned by the CCP.

The defendant had harassed the Chinese man with bogus lawsuits and told the victim it “really is a drop in the bucket for a country to spend $1 billion” to achieve what the CCP ordered, promising “endless misery” for the victim, he said. “It is definitely true that all of your relatives will be involved.”

In January, a former U.S. Navy sailor was sentenced to 27 months for giving sensitive military information to the CCP over the course of almost two years, in return for about $14,000.

“I mean, he’s paying me so I was like, okay, I’ll just do whatever he says,” the sailor told the FBI in an interview, describing the job as “easy money.”

Several other cases involving former military members include those of an indicted Navy sailor and Army soldier, and a former U.S. Army helicopter pilot who pleaded guilty.

Last month, Sgt. Korbein Schultz, an Army intelligence analyst with the First Battalion of the 506th Infantry Regiment at Fort Campbell, pleaded guilty to sending military secrets to a CCP agent, receiving $42,000 in return.

Beginning around June 2022, Schultz sent sensitive military files to an unnamed conspirator working for the CCP, receiving payments of up to $1,000 per document in return.

A month into the partnership, Schultz told the conspirator he would like to turn the relationship into a long-term one, according to the indictment. He provided materials including details about U.S. precision rockets, their performance, and how they would be used. He also shared manuals and technical data for several U.S. aircraft, documents referencing the Chinese military, and documents related to U.S. military forces in the Indo-Pacific.

(left) Zhu Yong returns to Brooklyn Federal Court for a trial in New York City on May 31, 2023. America's first federal trial got underway over China's alleged attempts to forcibly repatriate its citizens under a campaign known as Operation Fox Hunt. (right) Congying Zhen leaves Brooklyn Federal Court in New York City on May 31, 2023. Yuki Iwamura/AFP via Getty Images

The conspirator asked for information at higher classification levels as the partnership progressed and promised higher payments for more exclusive information.

“I hope so! I need to get my other BMW back!” Schultz wrote in response to the promise of higher pay. He told the conspirator he wished he could be “Jason Bourne,” and brought up the idea of moving to Hong Kong so he could work for the conspirator in person.

Four months into the partnership, the conspirator suggested recruiting another service member who had access to more highly classified information, and Schultz set out to do so over the next few months.

In another case, two men who pleaded guilty in July to acting as CCP agents had tried to bribe an IRS agent to target Falun Gong practitioners. Falun Gong, also known as Falun Dafa, is a spiritual practice that includes five meditative exercises and teaches the principles of truth, compassion, and tolerance. Since 1999, the CCP has sought to “eradicate” the practice in a whole-of-state approach.

Unbeknownst to the two men, the IRS agent was actually an undercover FBI agent, who took the $5,000 bribe, part of an offer of $50,000 in total, as evidence in their case. In a recorded call, one of the two men, John Chen, said the money came from Chinese authorities, who were “very generous” when it came to their goal to “topple” Falun Gong.

Insider Threat

Fleming says some “insider threats” were planted in companies and the military decades ago. He pointed as an example to the former Verizon software engineer, who had been sending the CCP data on Chinese dissidents in America since at least 2012, and said companies are now beginning to recognize the long-term effort through these high-profile cases.

Some recruits are attracted by the money, others by prestige. “They’ve done that to Harvard professors and so on: ‘We’re going to let you set up a sister lab in China ... and you’ll be the head of the lab,’” Fleming said as an example. “‘You’re so smart and accomplished. We’d like you to do a white paper.’”

Fleming said he receives a few of these offers a year himself. The most recent one, from Hong Kong, came just months ago. He promptly deleted it. “I know what’s going on, but many people don’t, and they take $7,500 to do a white paper,” Fleming said.

|

|

Tom Cruise performed unforgettable Olympics stunt without pay, insisted on no stunt double

Casey Wasserman, the LA28 president and chairperson, explained how Tom Cruise became involved in the jaw-dropping stunt performed at the 2024 Paris Olympics.

Published:9/13/2024 2:39:23 PM

|

|

[Elections]

Bob Casey Says He Loves Hunters and Fishermen. Why Is He Blocking Them From His Campaign Ads?

Sen. Bob Casey bills himself as a champion of Pennsylvania’s hunters and fishers, calling the outdoor sports a "part of Pennsylvania’s heritage." But on Facebook, the Democrat is blocking sportsmen in the commonwealth from seeing his campaign ads.

Published:9/13/2024 12:35:50 PM

|

[Markets]

McCormick Outlines 'Playbook' In Tight Pennsylvania Senate Race

Authored by Philip Wegmann via RealClearPolitics,

With August in the rear-view mirror, Senate candidate Dave McCormick (R-PA) admits he never really made much of “brat summer,” the amorphous Gen Z meme that no one can exactly define but that Vice President Kamala Harris has adopted while in pursuit of younger voters.

A catch-all term for “cool” that is also sort of kitsch, “brat” is one of the vibes that Harris has cultivated amidst a slow policy rollout to capture the imagination of voters and catapult herself in front of former President Donald Trump in the polls.

The term is trendy, meme-able, and, according to the Republican running for Senate in Pennsylvania, “not serious” at a moment when inflation lingers, the cost of living creeps ever higher, and the southern border remains porous. McCormick said of his pitch to voters during an interview with RealClearPolitics, “People are thirsting for a serious discussion about the future of the country.”

Plenty of Republicans say they want to focus on policy, not vibes, complaining that Democrats have gotten an early pass on substance from the moment Harris replaced President Biden as the nominee. Many have struggled all summer to reframe the conversation.

But while the GOP has lost ground nationally this summer, McCormick is gaining ground in Pennsylvania.

He tied incumbent Sen. Bob Casey in a recent CNN/SSRS poll at 46% and pulled within three points of the Democrat, who leads just 48% to 45% in the RealClearPolitics Average, after trailing in some polls by as much as double digits earlier this year.

Things are not as encouraging atop the ticket for Republicans. Harris has not only made up the ground that Biden lost to Trump in the critical swing state, but she is now tied with him in Pennsylvania. Nineteen Electoral College votes, and possibly the White House, hang in the balance there. It is quite the turnaround.

Trump had successfully defended a slim lead for seven straight months. Biden never surpassed the Republican a single time in the RCP Average. Harris pulled ahead of him in just two weeks as Trump slowly switched gears to attack his new rival. The nicknames tell the story. None of the early ones stuck.

“Laffin Kamala” gave way to “Lyin’ Kamala” and finally “Crazy Kamala” throughout the summer as Republicans griped that the “Harris Honeymoon” wouldn’t end. When he wasn’t talking about Biden’s exit, Trump struggled to define Harris. For his part, McCormick did it in less than three days.

“This is what voters down ballot will be seeing in every Senate race from [Nevada] to [Pennsylvania] until November,” a Republican operative texted RCP less than 72 hours after Democrats switched from Biden to Harris. It was a link to a 90-second McCormick ad that was about to drop online. The Republican doesn’t say a single word in the spot. Instead, the GOP campaign cut an ad to give Harris and Casey the spotlight.

“Kamala Harris is inspiring and very capable. The more people get to know her, they’re going to be particularly impressed by her ability,” Casey says at the beginning of the ad before a supercut follows of the vice president offering some of her most progressive policy prescriptions like ending the Senate filibuster, banning fracking, decriminalizing illegal immigration, and mandating gun buyback programs.

“There were no Republican voiceovers, no dark lights or ominous language,” McCormick said of the ad. “It was just her in her own words, saying what she believes. And then it was Senator Casey saying, she’s ready to be president today.”

The quotes from Harris were from her failed 2020 campaign for the Democratic nomination. The goal was guilt by association: Tying Casey to Harris, who was once rated the most liberal member of the Senate.

A spokeswoman for the Casey campaign responded by saying that “McCormick is grasping at straws” before noting that the senator has voted against fracking bans already and supported the bipartisan Senate border bill, which Harris pledges to sign into law if elected. The spokeswoman added that “Casey is actually delivering for the Commonwealth by holding greedy corporations accountable, lowering costs, and supporting our veterans and seniors.”

The incumbent certainly hasn’t held the Democratic nominee at arm’s length. In Pittsburgh earlier this week, Casey told a crowd that Harris “has proven” she is ready not just to be “our commander in chief” but is “ready in these next 60 plus days to take on Donald Trump and win.”

Republicans are still thrilled with the McCormick blueprint. Trump delegate Christian Ziegler called it “one of the most brutal and effective ads I’ve seen in a while” before suggesting that the advertisement should be played “every moment from now until November.” That strategy did not materialize immediately, at least on the national stage. Before Trump dubbed his opponent “Comrade Kamala,” her campaign quietly disavowed her more liberal positions in written statements to the press.

During a CNN interview at the end of the summer, Harris finally disavowed her previous calls to ban fracking and decriminalize border crossings, stressing, however, that “my values have not changed.”

McCormick doesn’t make much of those denials, of course. He again defined the race during the RCP interview by asking if voters “are willing to take the risk that she actually believes in all the radical stuff she once said she believed in.” His opponent, he added, “has been a sure vote for the policies of Kamala Harris.” He said he will leave it to other Republicans to run their own races, but at least for him, McCormick said, running a tape of vintage Harris “is the playbook in Pennsylvania.”

Other Republicans wish Trump would have copied and pasted the McCormick attack earlier. “The Trump team got caught flat-footed. That’s a fact,” a Republican operative close to that campaign told RCP. “They lacked the ability to message properly, or to get in front of this, or to get the president on message in a reasonable timeframe. Weeks went by before the ship could be righted again.”

Trump and Harris will meet for the first time on a debate stage next week in Philadelphia, and the former president previewed what the current vice president is likely to encounter. “Communism is the past. Freedom is the future,” Trump told the Economic Club of New York Thursday while hitting his opponent for embracing price controls. “It is time to send ‘Comrade Kamala Harris’ back home to California.”

McCormick and Casey, for their part, have already agreed to a debate next month in a state that is seen as key not only to Republican chances of retaking the Senate but also to winning back the White House.

“Pennsylvania is going to be super close,” McCormick predicted. “I suspect it’ll be super close for President Trump. I think he will win, but it is going to be a close race, and I think he knows it. That’s why he is spending so much time here.”

Defining the opposition will be key for Republicans in the state and nationally.

“I’m pretty confident that when people see who Kamala Harris is, President Trump will prevail,” McCormick said of the contrast. “And I’m hoping that if I continue to run a strong campaign, and they see how weak and liberal Bob Casey is, that I will prevail.”

Thus ends “brat summer” for McCormick. The confusing term defies definition. The poll numbers do not. He is the candidate who has surged to a tie in Pennsylvania and likely represents the best chance Republicans have to retake the Senate.

|

|

[Politics]

Debate prep

On this week's episode, The Washington Post's Libby Casey, JM Rieger and James Hohmann preview next week's debate, and what Vice President Harris and former president Trump need to do in what could be the final presidential debate of the cycle.

Plus, abortion – and Trump's stance on an abortion ballot initiative in his home state of Florida – has become a big part of the 2024 conversation.

And what's the latest on Trump's legal troubles? The delay tactics continue.

Published:9/5/2024 4:13:05 PM

|

|

[3ab670ca-e4eb-5745-9935-6adc39898726]

McCormick seizes on Pennsylvania Senate race gap, laying border blame on Casey

A new ad from Dave McCormick hits Democrat Sen. Bob Casey over a lack of action to secure the southern border as fentanyl continues to enter the U.S. illegally.

Published:9/3/2024 3:10:11 PM

|

|

[Entertainment]

‘Slingshot’ sets its mind games in outer space

Casey Affleck stars as an astronaut questioning reality.

Published:8/29/2024 5:13:51 AM

|

[Markets]

Target CEO Says Retailers Can't Price Gouge In Competitive Industry

Authored by Andrew Moran via The Epoch Times (emphasis ours),

In a highly competitive environment, retailers cannot engage in the practice of price gouging, said Target CEO Brian Cornell.

The entrance of a Target store in Ellicott City, Md., on March 24, 2024. Madalina Vasiliu/The Epoch Times

Vice President Kamala Harris recently proposed the first-ever federal price gouging ban on the food and grocery industries. Harris and other White House officials have claimed that businesses are taking advantage of the economic climate and overcharging customers by sharply raising prices to pad their profits.

Cornell dismissed these assertions, telling CNBC’s “Squawk Box” that Target is “in a penny business.”

“It’s a very competitive space,” he said, explaining that the industry faces small profit margins.

The Target executive noted that retailers must be responsive to consumers, who can look for lower prices and other products by scanning their phones or visiting other stores.

The Minnesota-based company has joined other retailers in responding to shoppers’ concerns.

In May, Target announced it would cut prices on about 5,000 essential everyday items, such as diapers, paper towels, fresh fruit and vegetables, coffee, and pet food, in order to bolster sales and generate higher traffic.

The discounts appear to have paid off, with in-store and web traffic rising 3 percent in the previous quarter. Target’s net profit margin—the percentage of profit a business produces from its total revenue—was up 2.4 percent year over year.

While many retailers are attempting to bring prices down amid more cost-conscious shoppers and slowing consumer demand, Walmart says inflation remains “more stubborn,” particularly for dry groceries and processed foods.

“We have less upward pressure, but there are some that are still talking about cost increases, and we’re fighting back on that aggressively because we think prices need to come down,” Walmart CEO Doug McMillon said on a second-quarter earnings call last week.

Debate About Price Gouging

Earlier this year, the current administration launched a joint Federal Trade Commission and Department of Justice task force to combat what officials say is illegal and unfair corporate pricing for food, rent, pharmaceuticals, and other goods and services.

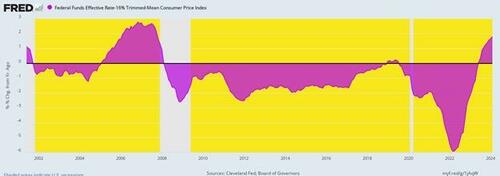

The debate persists as to whether corporate price gouging has kept inflation sticky and stubborn—the Federal Reserve Bank of Atlanta’s sticky-price Consumer Price Index (CPI) is up 4.1 percent year over year.

Fed officials and economists have expressed doubt that corporate gouging has contributed to the rampant price inflation over the last few years.

This past spring, San Francisco Fed economists published a paper that concluded the alleged price gouging was not a main factor for the inflationary pressures that have been prevalent since 2021.

“An increase in pricing power would be reflected in price-cost markups, leading to higher inflation; likewise, a decline in pricing power and markups could alleviate inflation pressures,” they wrote.

“The aggregate markup across all sectors of the economy, which is more relevant for inflation, has stayed essentially flat during the post-pandemic recovery.”

Chicago Fed President Austan Goolsbee told CBS’s “Face the Nation” that various “dynamics at play” can impact prices and wages.

“If you look at any given moment, that markup, sort of the difference between what’s happening to prices and what’s happening to costs, that can vary a lot over the business cycle,” Goolsbee said.

“So, I just caution everybody over concluding from any one observation about markups.”

The Producer Price Index (PPI), a metric that measures prices paid for goods and services by businesses, has risen 25 percent since January 2021, outpacing the CPI’s 20 percent increase.

Fed Chair Jerome Powell, too, dismissed the idea that corporate price gouging is contributing to inflation.

“It’s been very hard to track a connection with earnings and things,” he said in July, when he appeared before the House to deliver his semiannual monetary policy report.

This year, lawmakers have stepped up efforts to fight what they deem price gouging and shrinkflation, the practice of effectively raising prices by shrinking product sizes.

In February, Sen. Sherrod Brown (D-Ohio) introduced legislation to grapple with these issues, alleging that “corporations used supply shocks from the pandemic and war in Ukraine as an excuse to raise prices, and they keep raising them.”

In a letter to Kroger CEO Rodney McMullen, Sens. Elizabeth Warren (D-Mass.) and Bob Casey (D-Pa.) accused the supermarket chain of price gouging and hurting consumers.

“It is outrageous that as families continue to struggle to pay to put food on the table, grocery giants like Kroger continue to roll out surge pricing and other corporate profiteering schemes,” the lawmakers wrote.

A growing number of companies have embraced electronic shelving labels as part of broader dynamic pricing strategies.

Proponents say electronic shelving labels can lower customers’ prices over time because retailers can automate pricing updates and exploit the most recent data on a centralized pricing platform. Critics claim they will increase prices for consumers because dynamic pricing could help companies charge more for a product during times of the day when it is in higher demand.

Meanwhile, the public seems to support the flood of price-gouging claims.

A February Navigator Research study highlighted that 85 percent of Americans say “corporations being greedy and raising prices to make record profits” is a cause of inflation. A June 2023 YouGov survey showcased similar results, with most Americans blaming “large corporations seeking maximum profits” for high inflation.

|

|

[World]

The Democratic Party's party

This week, The Washington Post's Libby Casey, Rhonda Colvin and James Hohmann sit down just after the conclusion of Vice President Kamala Harris's acceptance speech at the Democratic National Convention. The crew discusses the vibes of the convention, how different it felt from the July Republican National Convention, and the policies Democrats put at the heart of their 2024 campaign. Then, the team goes through their favorite moments of the week.

Plus, were Democrats more effective than Republicans at harnessing the power of influencers and social media? The Washington Post Universe's Carmella Boykin and Joseph Ferguson join the show to discuss what's going on in the DNC's "influencer lounges" and beyond.

Published:8/23/2024 12:46:41 AM

|

|

[Politics]

Weird

On this week's episode, The Washington Post's Libby Casey, James Hohmann and JM Rieger are joined by The Washington Post Universe's Joseph Ferguson to discuss former president Donald Trump's appearance at the National Association of Black Journalists convention, and his attacks on Vice President Harris's racial identity.

Later, the crew dives into Republican vice-presidential nominee JD Vance’s continuing controversy over past comments he’s made about women who don’t have children. And who will Harris pick as her own vice-presidential nominee? We’re likely to find out in the next few days.

Published:8/1/2024 4:21:36 PM

|

|

[Climate Politics]

Team Harris Swears Kamala Won’t Kill Fracking, But Will Anyone Believe Them?

Bob Casey and Kamala Harris have opposed Pennsylvania energy every step of the way, and their anti-fossil-fuel agenda would be disastrous for our commonwealth and the 600,000 workers who rely on the energy sector for a paycheck.

Published:8/1/2024 12:01:41 AM

|

[Markets]

Doug Casey On 'Your Enemy, The Deep State'

Authored by Doug Casey via InternationalMan.com,

A lot of people would like political solutions to their problems, i.e., getting the government to make other people do what they want. People with any moral sense, however, recognize that can only create more problems.

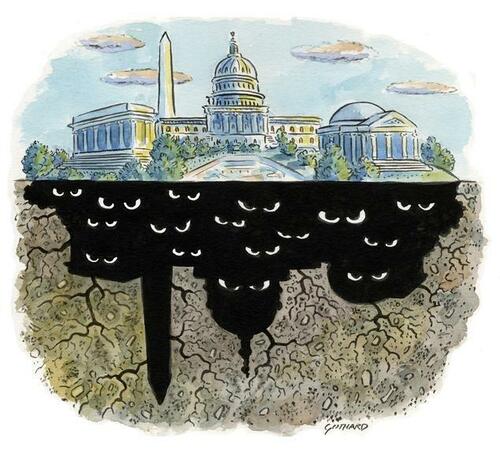

Clever players, therefore, use the government as a tool but do so from behind a curtain. They know that it’s more effective, and a lot safer, to pull the puppet’s strings from offstage. Sometimes, they step into the limelight, depending on the circumstances and the depth of their personal narcissism. But they’re all about two things: Power and money. Call them the Deep State.

Once a country develops an entrenched Deep State, only a revolution or a dictatorship can turn things around. And probably only in a small country.

The American Deep State is a powerful informal network which controls most institutions. You won’t read about it in the news because it controls the news. Politicians won’t talk about it or even admit that it exists. That would be like a mobster discussing murder and robbery on the 6 o’clock news. You could say the Deep State is hidden, but it’s hidden in plain sight.

The Deep State is involved in almost every negative thing that’s happening right now. It’s essential to know what it’s all about.

The State

The Deep State uses and hides behind the State itself.

Even though the essence of the State is coercion, people have been taught to love and respect it. Most people think of the State in the quaint light of a grade school civics book. They think it has something to do with “We the People” electing a Jimmy Stewart character to represent them. That ideal has always been a pernicious fiction because it idealizes, sanitizes, and legitimizes an intrinsically evil and destructive institution, which is based on force. As Mao once said, political power grows out of the barrel of a gun.

The Deep State

The Deep State itself is as old as history. But the term “Deep State” originated in Turkey, which is appropriate since it’s the heir to the totally corrupt Byzantine and Ottoman empires. And in the best Byzantine manner, our Deep State has insinuated itself throughout the fabric of what once was America. Its tendrils reach from Washington down to every part of civil society. Like a metastasized cancer, it can no longer be easily eradicated.

In many ways, Washington models itself after another city with a Deep State, ancient Rome. Here’s how a Victorian-era freethinker, Winwood Reade, accurately described it:

“Rome lived upon its principal till ruin stared it in the face. Industry is the only true source of wealth, and there was no industry in Rome. By day the Ostia road was crowded with carts and muleteers, carrying to the great city the silks and spices of the East, the marble of Asia Minor, the timber of the Atlas, the grain of Africa and Egypt; and the carts brought out nothing but loads of dung. That was their return cargo.”

The Deep State controls the political and economic essence of the US. This is much more than observing that there’s no real difference between the left and right wings of the Demopublican Party. Anyone with any sense (that is, everybody except the average voter) knows that although the Republicans say they believe in economic freedom (but don’t), they definitely don’t believe in social freedom. And the Democrats say they believe in social freedom (but don’t), but they definitely don’t believe in economic freedom.

Who Is the Deep State?

The American Deep State is a real but informal structure that has arisen to not just profit from but control the State.

The Deep State has a life of its own, like the government itself. Within the government, it’s composed of top-echelon employees of a dozen Praetorian agencies, like the FBI, CIA, and NSA…top generals, admirals, and other military operatives…long-term congressmen and senators…and directors of important regulatory agencies.

But the Deep State is much broader than just the government. It includes the heads of major corporations, all of whom are heavily involved in selling to the State and enabling it. That absolutely includes Silicon Valley, although those guys at least used to have a sense of humor, evidenced by their defunct “Don’t Be Evil” motto.

It also includes the top people in the Fed, and the heads of the major banks, brokers, and insurers. Add the presidents and many professors at top universities, which act as Deep State recruiting centers… top media figures, of course… and many regulars at things like the WEF, Bohemian Grove, and the Council on Foreign Relations. They epitomize the status quo, held together by power, money, and propaganda.

Altogether, I’ll guess these people number a couple thousand. You might analogize the structure of the Deep State to a huge pack of dogs. The people I’ve just described are the Top Dogs.

But there are hundreds of thousands more who aren’t at the nexus but who directly depend on them, have considerable clout, and support the Deep State because it supports them. This includes many of the wealthy, especially those who got that way thanks to their State connections… the 1.5 million people who have top secret clearances (that’s a shocking but accurate number), plus top players in organized crime, especially the illegal drug business, little of which would exist without the State. Plus mid-level types in the police and military, corporations, and non-governmental organizations.

These are what you might call the Running Dogs.

Beyond that are the scores and scores of millions who depend on things remaining the way they are, like the 50%-plus of Americans who are net recipients of benefits from the State—the 70 million on Social Security, the 90 million on Medicaid, the 50 million on food stamps, the many millions on hundreds of other programs, the 23 million government employees and most of their families. In fact, let’s include the many millions of average Joes and Janes who are just getting by.

You might call this level of people, the vast majority of the population, Whipped Dogs. They both love and fear their master; they’ll do as they’re told and roll over on their backs and wet themselves if confronted by a Top Dog or Running Dog who feels they’re out of line. These three types of dogs make up the vast majority of the US population. I trust you aren’t among them. I consider myself a Lone Wolf in this context and hope you are, too. Unfortunately, however, dogs are enemies of wolves and tend to hunt them down.

The Deep State is destructive, but it’s great for the people in it. And, like any living organism, its prime directive is: Survive! It survives by indoctrinating the fiction that it’s both good and necessary. However, it’s a parasite that promotes the ridiculous notion that everyone can live at the expense of society.

Is it a conspiracy headed by a man stroking a white cat? I think not. It’s hard enough to get a bunch of friends to agree on what movie to see, much less a bunch of power-hungry miscreants bent on running everyone’s lives. But, on the other hand, the Top Dogs all know each other, went to the same schools, belong to the same clubs, socialize, and, most importantly, have common interests, values, and philosophies.

The American Deep State rotates around the Washington Beltway. It imports America’s wealth as tax revenue. A lot of that wealth is consumed there by useless mouths. And then, it exports things that reinforce the Deep State, including wars, fiat currency, and destructive policies. This is unsustainable simply because nothing of value can come out of a city full of parasites.

A Solution?

Many Americans undoubtedly believe Donald Trump is a solution to what ails the US. They think he’s a maverick who will smash the Deep State. That’s understandable since he’s a cultural conservative. He wants the country to resemble the happy days of yesteryear, when Mom, apple pie, and Chevrolets were more important than Diversity, Equity, and Inclusion. He’s a nationalist, a traditionalist, and business-oriented. That’s all very well. But irrelevant to the Deep State.

The problem is that Trump will almost necessarily surround himself with Deep State players; they’re really all there is in and around the Washington Beltway, Wall Street, Academia, or the media. He’s not about to abolish government agencies (although he might prune a few) and fire scores of thousands of government employees. His lack of a philosophical core will guarantee that instead of trying to abolish the State (in the manner of Milei or Ron Paul), he’ll just use it in ways he thinks are righteous. The proof of that is the trillions of new government spending and deficits he approved of when he was in power. He’ll spend trillions more if he’s re-elected. And all of it will feed the Deep State.

On the bright side, if Trump is elected, his rhetoric will be less objectionable than Kamala’s. There will probably be less overtly disastrous legislation enacted, and some regulations will likely be cut back.

On the not-so-bright side, I’m afraid the Democrats could win come November. They have control of the apparatus of the State, and they absolutely don’t want to give it up. The Deep State will be just fine if Trump wins, of course. But nobody wants to take a chance; there might be some broken rice bowls. He might turn into a loose cannon. So the Deep State, the Establishment, will be even fatter and happier if the Dems take control.

That’s what they’ll work for. And that’s the way to bet.

* * *

It’s clear there are some ominous social, political, cultural, and economic trends playing out right now. Many of them seem to point to an unfortunate decline of the West. That’s precisely why legendary speculator Doug Casey and his team just released this free report, which shows you exactly what’s happening and what you can do about it. Click here to download it now.

|

[Markets]

Manufactured Uncertainty

Authored by Jeff Thomas via InternationalMan.com,

For many years, I’ve described a period that I envisaged to be in the future, in which much of what was considered “normal” would change dramatically.

The borders of some countries would change. The types of governments that ruled over them would change. In some cases, they would morph slowly into new entities; in others they would change suddenly.

Much of the wealth in the world would change hands. Enormous fortunes would be made by a few, whilst the life savings of countless others would be lost.

Large numbers of people would be on the move. Many would be refugees, hoping to escape poverty and/or oppressive governments. Others would be opportunists – those who look for the positives in periods of global upset.

Above all, this would be a period of change.

For most people, this would one day be looked back upon as previous generations looked back on the World Wars or the Great Depression – a time of devastation, in which many people lost all or most of what they had had.

But for some, especially those who foresaw the change, this period would one day be looked back upon as the time when their fortunes changed dramatically for the better.

Until recently, those who have predicted this dramatic change have been largely derided as “doom-and-gloomers” or “end-of-the-world crackpots.”

And it has been difficult to argue against this view, as regardless of how accurately we might have described what was headed our way, it was impossible to put a date on the onset of the crisis.

I became convinced of its eventuality in 1999, due to economic and political developments that would result in collapse at some point.

But the speed at which this would occur, and what governments and others would do to delay the eventuality, could not be known in advance. The best I could do was to repeatedly advise that significant events would increase in velocity and magnitude the closer we came to the collapse.

At that time, significant events were occurring once or twice a year. Since then, the frequency has increased until, today, significant events are occurring almost daily.

For those who have been following this line of thinking, the basic premise is that the governments of the world, often acting in concert with the major banks and industries, have sought to expand their wealth and power in such a way that, at some point, socio-economic collapse would inevitably occur.

And they’ve succeeded marvelously. For decades, they’ve dramatically increased their positions but have reached the point at which the bill for the big party must be paid, and they have no intention of paying it. It will be passed to the hoi polloi, who will soon realise that it will break many of them.

And they’ll be hopping mad.

So, how should this moment be handled? Historically, the most effective means by which those who have caused the problem can not only get away with it, but profit even more from the collapse, is to create a distraction to take focus away from themselves as the guilty parties.

As any magician knows, there’s no real magic. The trick is achieved through illusion. For a simple magic trick, a small distraction is needed. For a more elaborate trick to be pulled off, a larger distraction is necessary.

But for a worldwide socio-economic collapse to be pulled off, whilst the magicians remain in place to benefit from it, a major distraction is necessary.

In fact, if at all possible, multiple distractions should be employed.

Beginning in the early months of 2020, a virus has spread across the globe.

This virus is familiar to science: It’s a mutation of the coronavirus, which has been around for sixty years or more. It’s a common virus, carrying with it relatively predictable symptoms and a relatively predictable outcome for those who contract it.

Actual deaths are few and will occur mostly in those who are old and infirm or whose immune systems are already compromised.

But from the start, this virus was treated as a plague that would decimate the population. Almost immediately, the death figures were greatly exaggerated. The US government even went so far as to award thousands of dollars to hospitals for each death that they attributed to the virus.

Whole countries have been locked down, with millions of people losing their means of employment. In every case, essential freedoms have been removed from all citizens.

At the same time, the death of a minor criminal at the hands of police sparked off a riot in a seemingly unrelated event.

But, courtesy of the media, the riots quickly spread across the US in forty of the fifty states. Incredibly, many mayors and governors stated that the rioters were justified in their unlawful actions and ordered police to stand down, exacerbating the riots.

To make matters worse, it soon became apparent that many of the rioters were bussed into cities, paid to cause as much destruction as possible. The funding for such groups has since been traced to plutocrats who created the problem in the first place.

In some locations, entire hotels in upper-class neighbourhoods have been taken over by state governments to house homeless people, including the criminals, drug addicts and prostitutes.

These events have become so widespread that whole neighbourhoods are emptying out of residents.

The upset and confusion increase each day, and the average person is now afraid to turn on the news each evening.

We’re witnessing a sleight of hand of epic proportions.

We’ve entered the crisis period. From here on in, wealth will be extracted from the populace on a wholesale level. In addition, “inalienable rights” are already becoming a thing of the past.

The uncertainty that the average person is finding himself in is not an illusion, nor is it an accident.

Those who imagine that the Powers That Be exist to help them in their troubles will be the ones who are most dramatically damaged by this manufactured uncertainty, for they will live in the false hope that the very people who created the problem will come to their aid and save them.

Nothing could be further from the truth. Yet, just as those who are abused often turn to their abuser for protection, we shall see a populace that seeks salvation through a government upon which they bestow more power than before, not less.

* * *

The truth is, we’re on the cusp of an economic crisis that could eclipse anything we’ve seen before. And most people won’t be prepared for what’s coming. That’s exactly why bestselling author Doug Casey and his team just released a free report with all the details on how to survive an economic collapse. Click here to download the PDF now.

|

|

[Entertainment]

14 books to get you through summer and beyond

From Joseph Kanon's suspense to Casey McQuiston's romance, find your next favorite book in our summer reading roundup.

Published:7/27/2024 7:58:32 AM

|

|

[Democrats]

Bob Casey Praises Radical Admiral Rachel Levine's 'Deep Qualifications'

Sen. Bob Casey has largely avoided taking a public stance on the most controversial aspects of the debate over transgender issues. But the Pennsylvania Democrat was more forthcoming in a questionnaire seeking the endorsement of an LGBT group earlier this year, according to documents obtained by the Washington Free Beacon.

Published:7/26/2024 4:48:36 PM

|

|

[]

The Most Devastating Campaign Ad of the Cycle?

Published:7/24/2024 1:23:07 PM

|

[Markets]

Quinn: "They" Will Do Anything To Win

Authored by Jim Quinn via The Burning Platform blog,

“We’ll know our disinformation program is complete when everything the American public believes is false.” – William J. Casey, CIA Director (1981)

The always mysterious question when trying to figure out what is happening in this insane world and why it is happening is who are “they”? In the current chaotic atmosphere, “they” are in the process of throwing their senile, child-sniffing, pedophile Trojan horse president overboard because his dementia-ridden brain has been laid bare for all the world to see. It isn’t just the Democratic Party throwing him to the wolves.

When you witness the party, supposedly loyal politicians, the regime media, surveillance state spooks, Hollywood celebrities, and globalist billionaires all simultaneously turn on the person they installed in 2020 through a rigged election, you realize the voting public has no say in how this country is run. Whether you refer to “they” as the Deep State, invisible government, ruling elite, globalist oligarchs, or shadowy men in smoke-filled back rooms, we are just bit players in this surreal horror movie.

It is no longer a conspiracy theory that we are ruled by unelected men using their wealth to pull the levers of society to benefit themselves and the apparatchiks who do their dirty work. At some level, this type of control has existed since the inception of our country. Andrew Jackson’s tirade in the 1830s revealed there were deceitful men operating in the shadows, with their greedy agendas prevailing over what was best for the people.

“Gentlemen! I too have been a close observer of the doings of the Bank of the United States. I have had men watching you for a long time, and am convinced that you have used the funds of the bank to speculate in the breadstuffs of the country. When you won, you divided the profits amongst you, and when you lost, you charged it to the bank. You tell me that if I take the deposits from the bank and annul its charter I shall ruin ten thousand families. That may be true, gentlemen, but that is your sin! Should I let you go on, you will ruin fifty thousand families, and that would be my sin! You are a den of vipers and thieves. I have determined to rout you out, and by the Eternal, (bringing his fist down on the table) I will rout you out!”

The capture of our government, media, and financial system accelerated during the last century, as described by Edward Bernays in his 1928 book, Propaganda. Of course, he wrote this fifteen years after the capture of our financial system, when the Creature from Jekyll Island, the Federal Reserve, was created by a cabal of bankers and politicians intent on lining their pockets.

“The conscious and intelligent manipulation of the organized habits and opinions of the masses is an important element in democratic society. Those who manipulate this unseen mechanism of society constitute an invisible government which is the true ruling power of our country. …We are governed, our minds are molded, our tastes formed, our ideas suggested, largely by men we have never heard of. This is a logical result of the way in which our democratic society is organized. Vast numbers of human beings must cooperate in this manner if they are to live together as a smoothly functioning society. …In almost every act of our daily lives, whether in the sphere of politics or business, in our social conduct or our ethical thinking, we are dominated by the relatively small number of persons… who understand the mental processes and social patterns of the masses. It is they who pull the wires which control the public mind.”– Edward Bernays – Propaganda (1928) pp. 9–10

The traitorous president who signed the bill creating the Federal Reserve, in the same year he signed into existence the loathsome income tax, and who later promised to keep the U.S. out of World War I before taking us into that war, supposedly regretted handing over control of the government to a small cabal of dishonorable men. Politicians and Wall Street bankers have been using the debasement of our currency to enrich themselves at our expense ever since.

“I am a most unhappy man. I have unwittingly ruined my country. A great industrial nation is controlled by its system of credit. Our system of credit is concentrated. The growth of the nation, therefore, and all our activities are in the hands of a few men. We have come to be one of the worst ruled, one of the most completely controlled and dominated Governments in the civilized world — no longer a Government by free opinion, no longer a Government by conviction and the vote of the majority, but a Government by the opinion and duress of a small group of dominant men.” – Woodrow Wilson – 1919

For decades the Deep State was able to remain hidden, working in the shadows behind the scenes, with the general population oblivious to their existence. But their existence was outed when they assassinated John F. Kennedy in front of the entire world and took out their CIA patsy on national TV. The CIA, along with their media mouthpieces, created the term “conspiracy theorist” as a derogatory term to keep the public sedated and onboard with whatever narrative they were selling.

The Church Committee in the mid-1970s further revealed the depth and breadth of the traitorous activities of the CIA and the surveillance state bureaucracy. Mike Lofgren defined the Deep State in his 2016 book, describing how the consent of the governed has been nothing more than a fantasy for many decades in this country.

“I have come to call this shadow government the Deep State…a hybrid association of key elements of government and parts of top-level finance and industry that is effectively able to govern the United States with only limited reference to the consent of the governed as normally expressed through elections.” – Mike Lofgren, The Deep State: The Fall of the Constitution and the Rise of a Shadow Government

Still, anyone questioning the officially approved narrative, as propagated by the bought-off politicians; captured, propaganda-spewing regime media; paid-off “experts” in academia, finance, the military, and the sciences; globalist organizations; and billionaires with fake foundations, was ridiculed and censored as a nutjob conspiracy theorist.

But then came Trump’s unlikely rise to power in 2016, and the subsequent traitorous acts by the Deep State and their lackeys in the government, media, and surveillance state bureaus in attempting to destroy Trump: the fake Russiagate investigations, two impeachments based on nothing, creating a fake pandemic to rig the 2020 election, instigating a fake insurrection to keep him from running again, using the power of the DOJ to bring multiple fake charges against him, raiding his residence with orders to shoot if necessary, allowing millions of illegals to enter the country and encouraging them to vote illegally to throw the 2024 election, vilifying him as a threat worse than Hitler, and now being exposed using a patsy to try and assassinate him while his Secret Service detail pretended to protect him.

There should be no doubt in anyone’s mind that a Deep State/Invisible government is running the show, and we are all nothing more than spectators who are brainwashed into doing what we are told by those who “have our best interests at heart”. After they came within one inch of blowing Trump’s head off (à la JFK), the narrative being spun is that we all must unite and tone down the vitriol. It is too late, and they know it. This is all for show.

We are now at war with the Deep State, and anyone associated with their criminal deeds. Their need to retain power grows more desperate by the day. They have concluded they must win the upcoming election at all costs, or their unlawful acts over the last eight years will be revealed and retribution doled out to the perpetrators. Their failure to assassinate Trump does not mean they will not try again.

Their biggest hurdle is that their ballot rigging operation only works in the swing states when the vote is reasonably close. The presidential debate and subsequent embarrassing press conferences have revealed Biden to be a dementia-ridden husk who can’t even read a teleprompter at this point. Only the most brain-dead liberal kooks will vote for this walking dead candidate.

After Trump’s triumphant post-assassination-attempt photo op, with fist raised in victory, his odds of winning the election went through the roof. The potential replacement candidates for Biden are a motley crew of idiocy, failure, and low-IQ diversity options. When the Deep State was blindsided in 2016 with the Trump victory, they proceeded to derail his presidency by convincing him to hire their Deep State operatives into key roles, while keeping the Congress, DOJ, DOD, FBI, and CIA at war with everything Trump tried to accomplish. If he wins this time, he won’t make the same miscalculations again. And they know it.

We have less than four months until the election. These being the waning years of this Fourth Turning, whatever happens from here on out will only be intensified. There will be no unity. There will be no compromise. Fourth Turnings do not wind down. They accelerate towards a bloody crescendo, with clear winners and losers. The potential for civil and global war rises on a daily basis.

Most of the ignorant, iGadget-distracted, narrative-believing masses have no clue how close the world is to erupting in violent, no-holds-barred death and destruction. Virtually no one is alive who remembers the horrors of WWII. That is why Fourth Turnings recur every eighty years or so. We forget the past and are condemned to relive it. There are several scenarios that could play out.

The Deep State may have provided Trump with a stark reminder about who really runs the show. Will he heed their warning? If so, they may let him win and then continue their control behind the scenes, letting him know they can take him out at a time of their choosing. Trump’s cooperation in this scenario is possible, but after the last eight years of unbridled attacks on him, this may be unlikely.

They could try another assassination attempt, but the world is now aware of their hatred for Trump, and would likely react negatively towards all parties involved in the murder of the past and future president of the U.S. The question is how desperate these godless authoritarians are. How many innocent people are they willing to sacrifice at the altar of mammon and power to continue their stranglehold on the political, financial, social, and economic structures of the U.S. and the western world?

Since they will do anything to win and remain in control of the levers of our society, what may seem unthinkable to you and me wouldn’t make these sociopaths pause for a second. They’ve already killed tens of millions with their fake pandemic and their toxic antidote gene therapy. All done to rig an election, enrich themselves, and cull the herd. We already know the neo-con branch of the Deep State has been saber rattling towards both Russia and China.

We also know false flags are one of their favorite tools to initiate conflict and fill their coffers with blood money. Our blood and their money. We’ve already seen a possible scenario with their puppet Zelensky in the Ukraine. If you can create a national emergency through either a civil or foreign war, you can cancel the election until the emergency is over.

A successful assassination of Trump this past weekend would have initiated some form of chaos in the streets, with the political powder keg poised to be lit by one side or the other. The Deep State is certainly capable of creating a false flag that kills hundreds or thousands of innocents, blaming white nationalist Trumpers for the tragedy. Another George Floyd incident could be cooked up, with Soros unleashing his Antifa and BLM terrorist hordes on cities across the land.

The normal citizens supporting Trump will only be pushed so far, and if they begin to fight back, all hell could break loose. This is what the Deep State wants. Chaos and violence in the streets would be their excuse to “delay” the elections. That would cause even more violence and upheaval. The Deep State thrives on violence, chaos, war, and a fearful populace begging to be saved.

The Deep State is conducting the war against Russia. The Ukrainian soldiers are being chewed up by the Russian army, but the high-tech aspects of the war are being carried out by Americans, who are the only ones capable of operating the drones and advanced missiles being used against Russia. They know Putin’s red lines. If they choose to start World War III, they will initiate an attack guaranteed to force Putin into a drastic response, attempting to ignite a conflagration across Europe and into the Middle East.

With the US/EU sanction war having pushed Russia, China, and the rest of the BRICS countries closer together, China and possibly India would side with Russia in a global conflict. The use of nuclear arms becomes more and more likely as the warring countries become more desperate and are pushed into a corner.

The Deep State has no qualms about killing millions to attain their goals and retain their wealth, power, and control over the masses. I do believe the next four months will mark a turning point in the history of the world. I fear there will be much bloodshed, but I will continue to try to decipher the path of this Fourth Turning and guide my family, and anyone choosing to listen, towards the light and away from the darkness. I do believe Strauss & Howe’s possible outcomes for this Fourth Turning are an accurate portrayal of what awaits us within the next decade.

-

This Fourth Turning could mark the end of man. It could be an omnicidal Armageddon, destroying everything, leaving nothing. If mankind ever extinguishes itself, this will probably happen when its dominant civilization triggers a Fourth Turning that ends horribly. For this Fourth Turning to put an end to all this would require an extremely unlikely blend of social disaster, human malevolence, technological perfection and bad luck.

-

The Fourth Turning could mark the end of modernity. The Western saecular rhythm – which began in the mid-fifteenth century with the Renaissance – could come to an abrupt terminus. The seventh modern saeculum would be the last. This too could come from total war, terrible but not final. There could be a complete collapse of science, culture, politics, and society. Such a dire result would probably happen only when a dominant nation (like today’s America) lets a Fourth Turning ekpyrosis engulf the planet. But this outcome is well within the reach of foreseeable technology and malevolence.

-

The Fourth Turning could spare modernity but mark the end of our nation. It could close the book on the political constitution, popular culture, and moral standing that the word America has come to signify. The nation has endured for three saecula; Rome lasted twelve, the Soviet Union only one. Fourth Turnings are critical thresholds for national survival. Each of the last three American Crises produced moments of extreme danger: In the Revolution, the very birth of the republic hung by a thread in more than one battle. In the Civil War, the union barely survived a four-year slaughter that in its own time was regarded as the most lethal war in history. In World War II, the nation destroyed an enemy of democracy that for a time was winning; had the enemy won, America might have itself been destroyed. In all likelihood, the next Crisis will present the nation with a threat and a consequence on a similar scale.

-

Or the Fourth Turning could simply mark the end of the Millennial Saeculum. Mankind, modernity, and America would all persevere. Afterward, there would be a new mood, a new High, and a new saeculum. America would be reborn. But, reborn, it would not be the same.